CT scan peels back the layers of time to reveal the engineering within Intel’s iconic 386 CPU — exposing intricate pin mapping, hidden power planes, and more

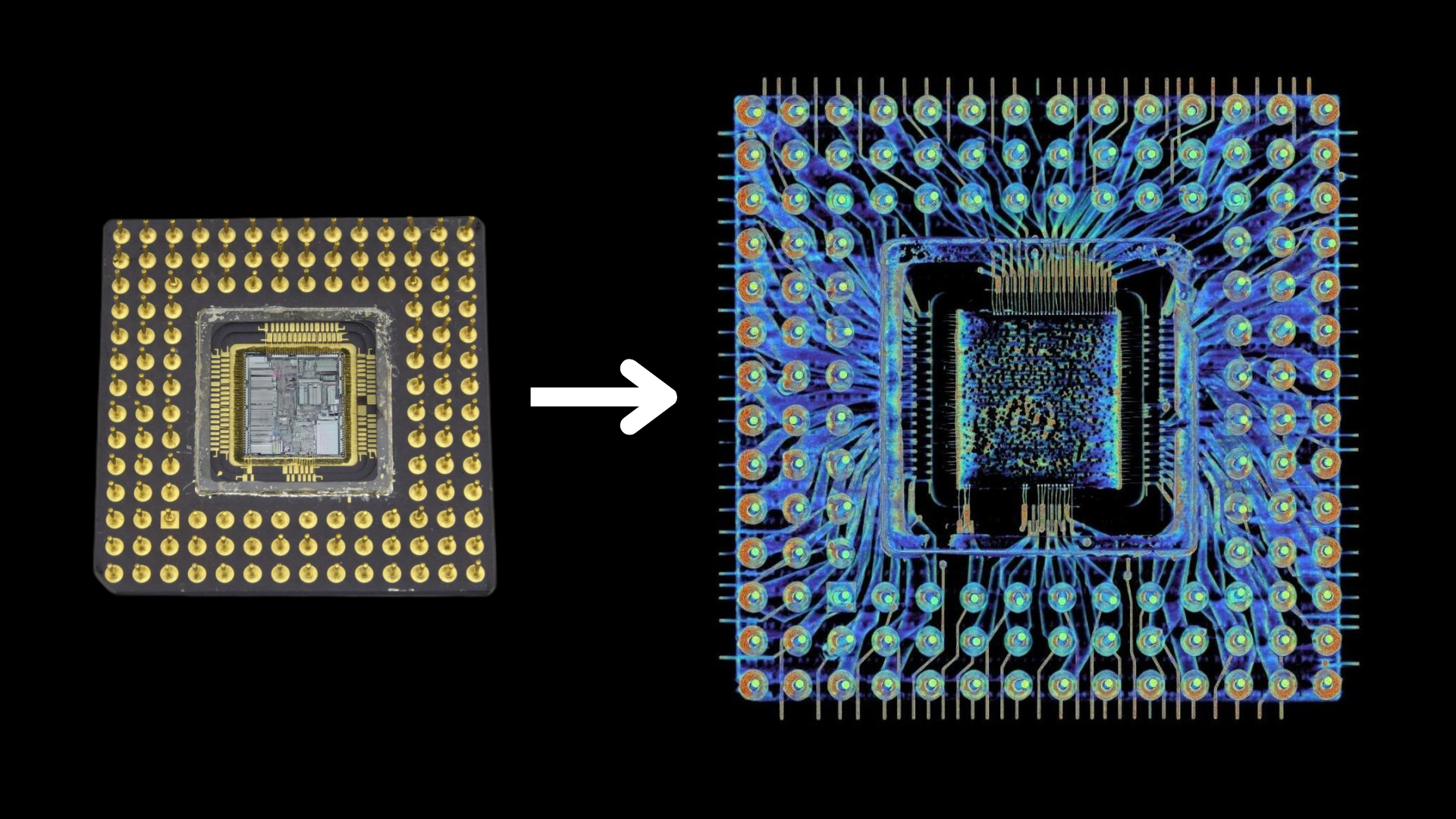

In today's world of bleeding-edge processes and packaging techniques, the Intel 386 doesn’t look like much—a grey ceramic slab with 132 gold pins, something you’d expect to find rattling around in an old parts bin at this point. But behind that dull façade lies a marvel of late-1980s chip packaging, the kind of engineering we've taken for granted. Using a CT scanner and some clever digital dissection, Ken Shirriff managed to explore every layer of this classic CPU without once touching a screwdriver or desoldering gun.

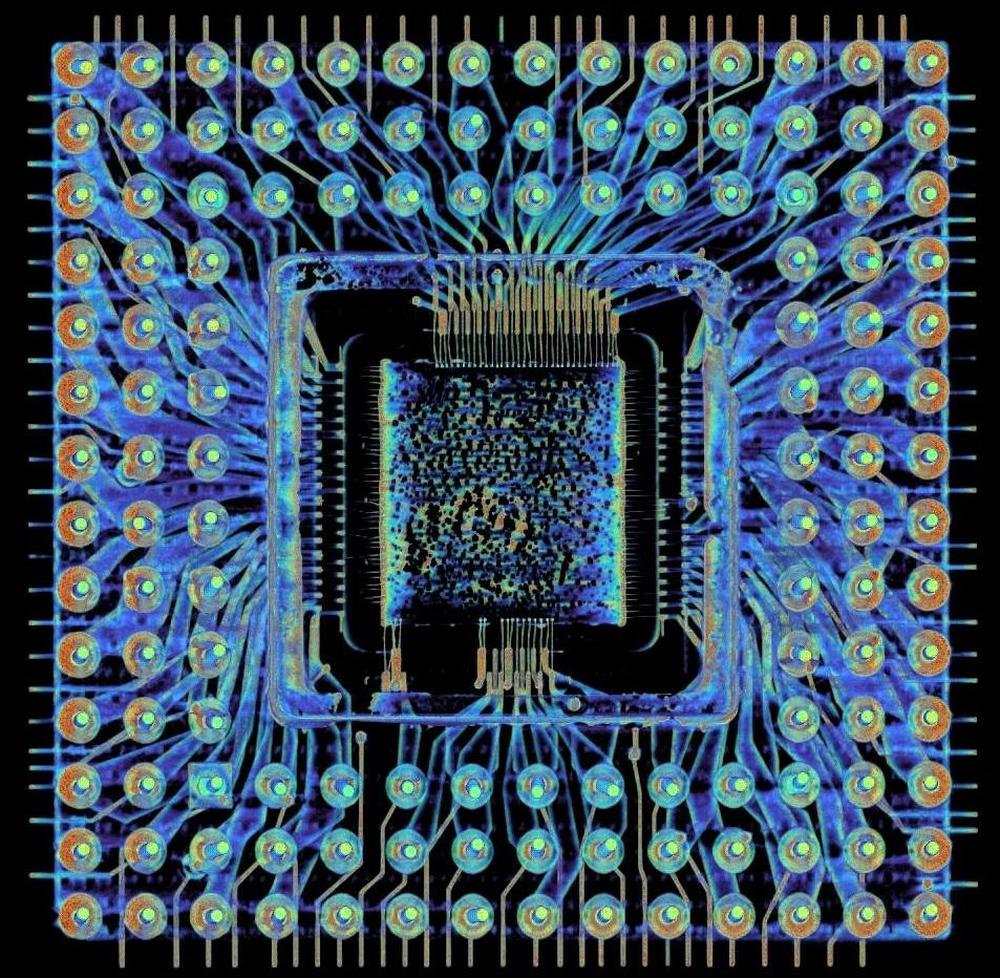

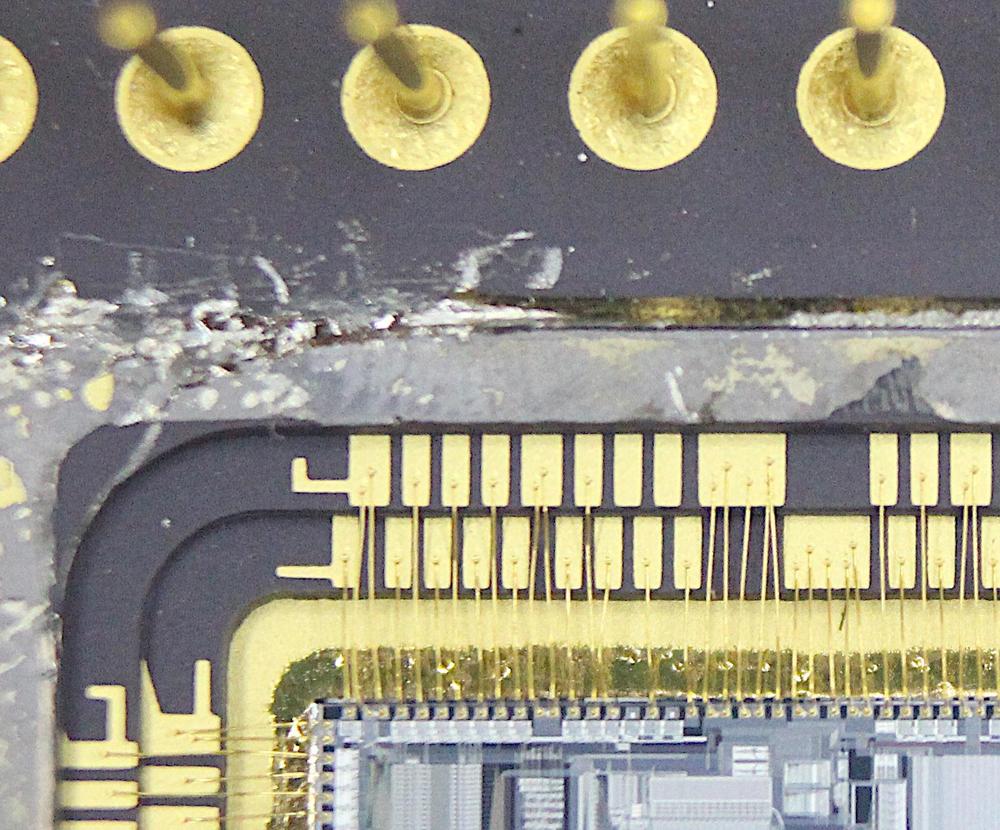

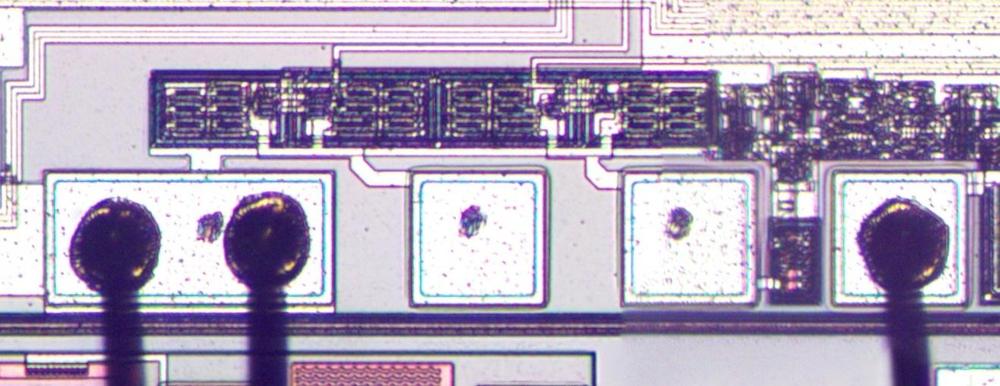

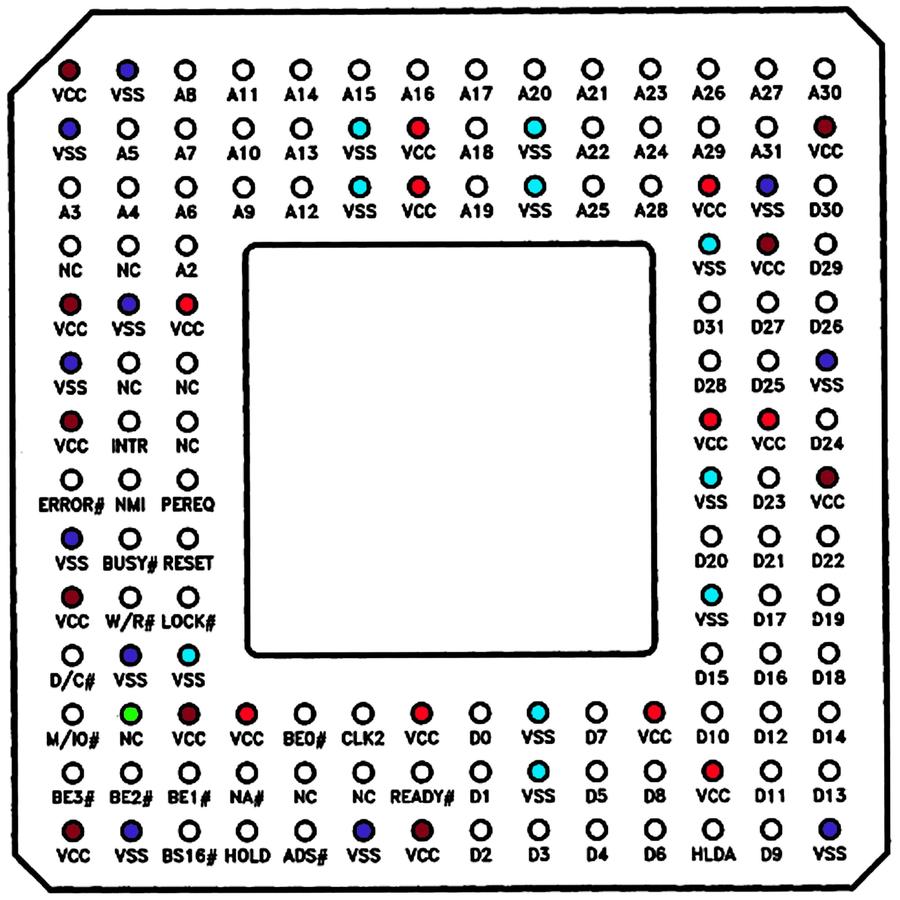

The scan produced hundreds of razor-thin X-ray slices, which were stitched into a 3D model you can spin, zoom, and digitally “peel” layer by layer. The first reveal is a halo of gold bond wires, each just 35 µm thick, far thinner than a human hair, radiating from the silicon die. These act as microscopic suspension bridges between the die’s pads and the package’s internal routing. Some carry simple data or control signals, others gang together—up to five on a pad—to handle the heavier demands of power and ground.
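To see why ganging several wires onto one pad helps with power and ground, here is a rough back-of-the-envelope sketch. Only the 35 µm diameter comes from the article. The 3 mm wire length is a hypothetical value chosen purely for illustration, the resistivity is the textbook figure for bulk gold near room temperature, and the function names are made up for this example. It is an estimate under those assumptions, not a measurement of the 386.

```python
import math

GOLD_RESISTIVITY_OHM_M = 2.44e-8  # bulk gold near room temperature (textbook value)
WIRE_DIAMETER_M = 35e-6           # bond wire diameter quoted in the article
ASSUMED_LENGTH_M = 3e-3           # HYPOTHETICAL wire length, not from the article


def wire_resistance(length_m, diameter_m, resistivity=GOLD_RESISTIVITY_OHM_M):
    """DC resistance of one round wire: R = rho * L / A."""
    area = math.pi * (diameter_m / 2) ** 2
    return resistivity * length_m / area


def ganged_resistance(n_wires, length_m, diameter_m):
    """Resistance of n identical wires connected in parallel."""
    return wire_resistance(length_m, diameter_m) / n_wires


if __name__ == "__main__":
    for n in (1, 2, 5):
        r = ganged_resistance(n, ASSUMED_LENGTH_M, WIRE_DIAMETER_M)
        print(f"{n} wire(s): {r * 1000:.1f} milliohm")
```

Under these assumptions, five parallel wires present one fifth of the resistance of a single wire, so the same supply current causes a proportionally smaller voltage drop between the package and the die.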

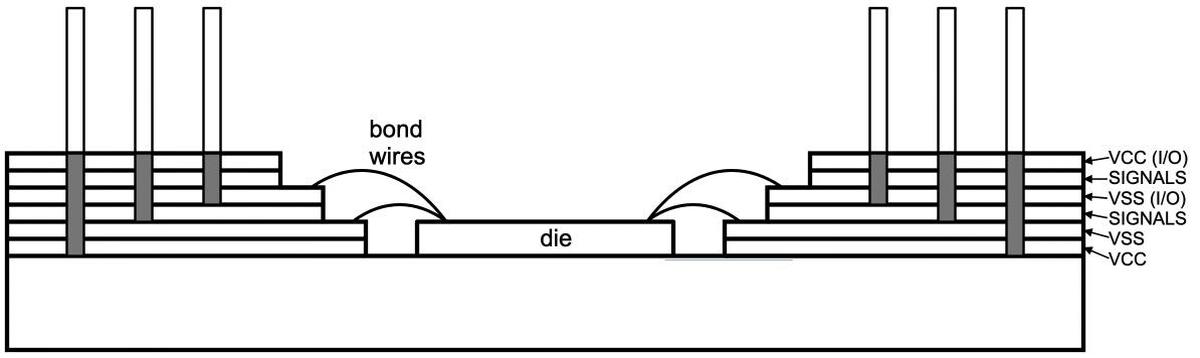

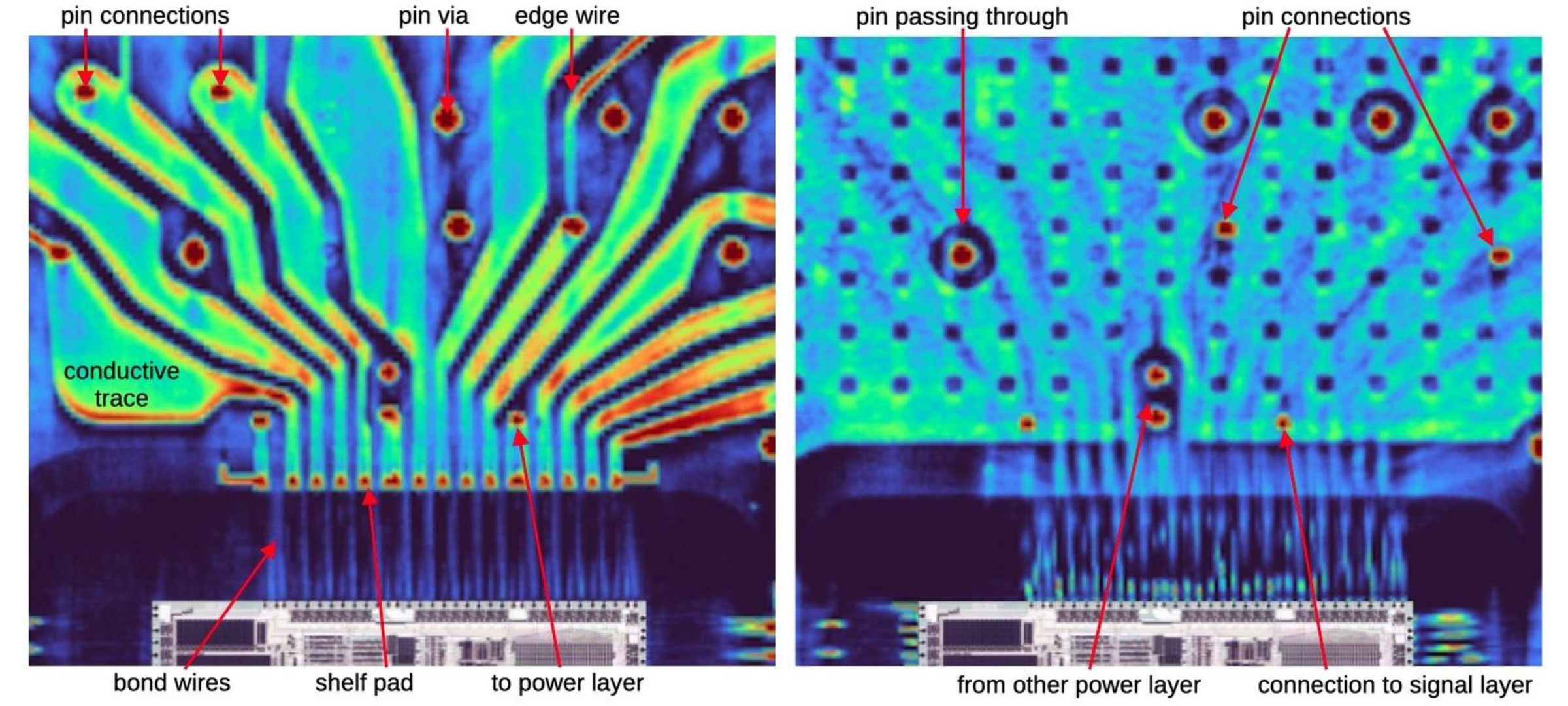

Pull back another layer, and the 386’s package shows its true sophistication: it’s essentially a six-layer circuit board in miniature. Two internal layers carry the signals while four dedicated copper planes deliver clean, stable power and ground to different sections of the chip. This “single-row double-shelf” bonding method, described in Intel’s own packaging literature attached below, was a way to cram maximum connectivity into a surprisingly compact footprint.

Digging deeper into the scans, Shirriff was able to map which pins connect where—some routing directly to I/O pads, others buried deep into the power and logic supply network. The CT imaging even caught details Intel probably never intended anyone to see, like the fine side wires used during manufacturing to electroplate the pins in gold. These plating contacts, barely visible spikes along the package edge, were confirmed when he lightly sanded the ceramic to match the scans.

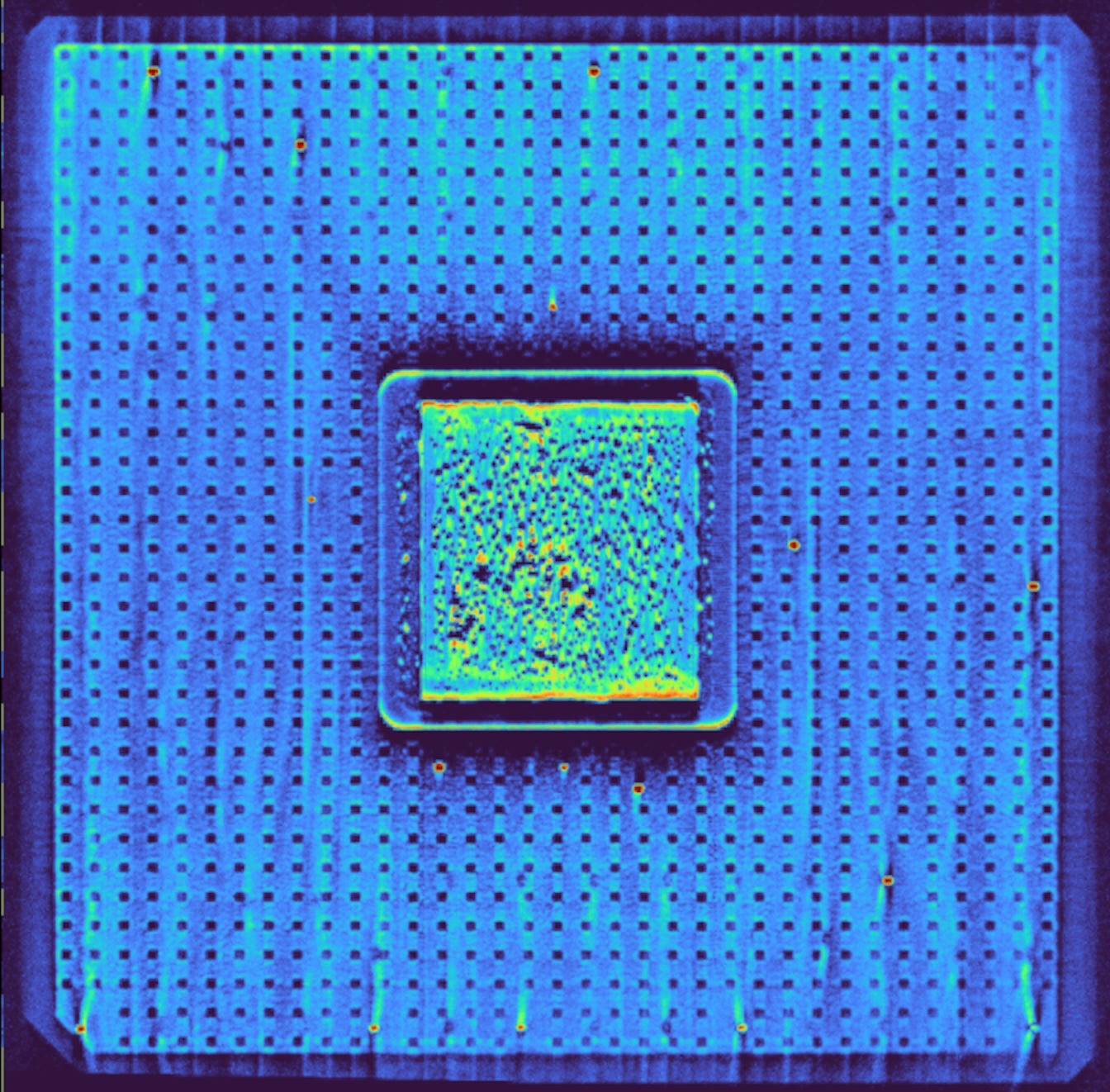

Moving on, the scans compare the signal and power layers side-by-side, which reveals the design’s sophistication. The signal layers are like a tangled web of thin copper paths, weaving between tiny vias, carrying all the data and control signals across the chip. They’re intricate and precise, designed to navigate the complex logic inside.

Power layers, by contrast, are almost solid sheets of copper, with only a few holes where the bond pads and vias break through. These planes deliver clean, steady power and ground to the chip, making sure everything runs smoothly without interference or noise that could cause glitches.

Beneath the silicon die, the X-ray reveals a bright patch—that’s silver-filled epoxy. This isn’t just glue; it’s a carefully chosen material that pulls heat away from the chip while also providing a direct, low-resistance ground connection. It’s a subtle but vital part of how the package keeps the 386 stable and reliable under load.

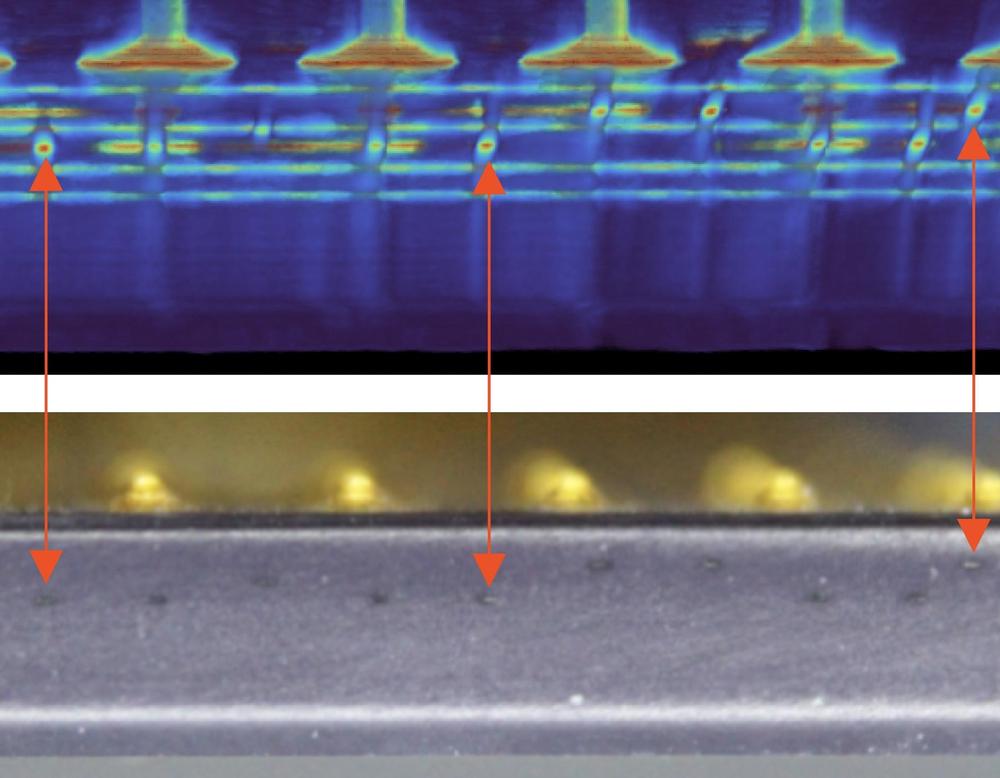

The packaging acts like a bridge across massive differences in scale. Inside the silicon die, the tiniest features are about 1 micrometer wide—barely visible even under a microscope. These then expand to wiring on the die around 6 micrometers, which connect to bond pads spaced about a quarter of a millimeter apart.

Those bond pads link to package pads spaced at half a millimeter, and finally to the pins on the bottom of the chip, spaced 2.54 millimeters apart. It’s an incredible zoom-out—roughly 2,500 times—from the microscopic core logic to the bulky socket pins you can see and touch.
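The "roughly 2,500 times" figure can be checked with a few lines of arithmetic. The sketch below uses only the dimensions quoted above, converted to micrometers ("a quarter of a millimeter" is taken as 250 µm). The labels and function names are illustrative.

```python
# Feature sizes and spacings quoted in the article, in micrometers.
SCALES_UM = [
    ("smallest die features", 1.0),
    ("on-die wiring", 6.0),
    ("die bond pad spacing", 250.0),
    ("package pad spacing", 500.0),
    ("socket pin spacing", 2540.0),
]


def step_ratios(scales):
    """Return (from_name, to_name, ratio) for each consecutive pair of scales."""
    return [
        (a_name, b_name, b / a)
        for (a_name, a), (b_name, b) in zip(scales, scales[1:])
    ]


def total_ratio(scales):
    """Ratio between the largest (last) and smallest (first) scale."""
    return scales[-1][1] / scales[0][1]


if __name__ == "__main__":
    for src, dst, ratio in step_ratios(SCALES_UM):
        print(f"{src} -> {dst}: x{ratio:.2f}")
    print(f"overall: x{total_ratio(SCALES_UM):.0f}")
```

The overall ratio comes out at 2,540, which matches the article's rounded figure. The largest single jump is from on-die wiring to the bond pads, a factor of roughly 42.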

If you lined up one of the 386’s bond wires next to the socket pins it connects to, the size difference would be absurd. That’s the beauty of this packaging: it not only protects the die, but transforms it from a delicate, microscopic device into a robust, installable part you could—in theory—swap in and out of a motherboard by hand.

Moreover, the 386 package includes eight pins labeled as “No Connect” (NC), meaning they aren’t wired to the motherboard and appear unused. On the die, however, most of these NC positions are nearly connected: the internal wiring and bond pads are in place, and only the bond wires are missing. Intel left these as placeholders, likely for testing or debugging during manufacturing. Interestingly, one NC pin is actually connected to the die and functions as an output, hinting at hidden signals Intel kept under wraps. Refer to the second gallery image to identify the NC pins on the die.

For enthusiasts, the original blog post is well worth reading; it goes into far more technical detail. For Intel, this ceramic multi-layer approach wasn’t just about elegance; it finally broke through the company's early obsession with keeping pin counts low (a relic of Intel management's 'bizarre belief that chips should never have more than 16 pins', according to Shirriff), which limited the potential of its first processors from the 1970s.

The 386 marked a culmination of Intel's move to fully embrace high pin counts and advanced packaging, recognizing that complex chips demanded sophisticated, multi-layered ceramic packages to handle power, signal integrity, and heat. This shift was pivotal, enabling the performance leaps that made modern computing possible.

It’s easy to forget now, but the 386 was the CPU that made contemporary multitasking PCs possible, and its packaging was as important to that leap as its transistor count. Shirriff’s CT scan work doesn’t just satisfy curiosity; it’s a reminder that in the early microprocessor wars, Intel invested some of the world’s best engineering talent into what seemed like the most mundane aspect—"the box it comes in." Only time will tell if the once-iconic innovation powerhouse will return to its former glory.

Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.

abufrejoval Somehow my 386s must have been too precious to leave lying around; they went with the systems themselves to family and friends.

But I still have a 486 or two, because that's where the crazy replacements started and an 'ordinary' 33MHz part would get replaced with a DX2, and then several generations of AMD's DX3/4 or Kx parts, which hid quite a bit of extra functional scope and computing power in a form factor that was largely unchanged.

Most importantly those became noisy because they needed active cooling!

All those 80386 and 80486 were still fully passive, just ceramics or even plastic in plain view, with only hard disks and power supplies playing a bit of a mid-range tune.

Sam Hobbs The article says:

"the 386 was the CPU that made contemporary multitasking PCs possible"

Was the Motorola 68000 series not capable of multitasking at the time? Also, I assume that IBM's 801-based microprocessors were capable of multitasking. They began use about the same time as the Intel 80386.

I wonder if the CT scans and other techniques are capable of detecting hidden instructions and related features.

MooreBreaker I find all these types of "first to ****" claims arbitrary for the most part.

Technically the 68000 is a 32-bit architecture. And yet most computers/consoles only claim 16 bit because of course it was also 16 bits and mainly coded for 16 bits.

So you know. What's first is meaningless a lot of the time. You can be the first to create something, but what does that mean if the capabilities ain't used?

Alex/AT

MooreBreaker said: I find all these types of "first to ****" claims arbitrary for the most part. [...]

It's not about bits and register/bus width. The 80386 is all about the MMU (physical address space) and privilege levels / exceptions (command set) facilitating isolation and segmentation between processes. As the 68000 lacks these, it can only be used for carefully designed cooperative multitasking (even if timeframe preemption is facilitated). Basically, if processes can easily damage each other's memory or render the OS level inoperative, it's the end.

And even if we are talking just widths, no, the 68000 ALU is 16-bit internally, and its external data bus is also 16-bit. That has nothing to do with multitasking though.

Tanakoi

Sam Hobbs said: Was the Motorola 68000 series not capable of multitasking at the time? Also, I assume that IBM's 801-based microprocessors were capable of multitasking. They began use about the same time as the Intel 80386.

IBM coded OS/2 to task switch on the 80286 in real time. But the performance and memory overhead of multitasking OSes were prohibitive for this chip.

Today, we're happy to see a 20% performance increase on a new CPU release, but 286 -> 386 (plus a simple 32-bit recompile) made many programs run 10X or more faster than before. It was literally the largest performance leap since the dawn of the PC.

Alex/AT Yes. 80286 has privilege rings, and has segmentation but not paging in the MMU.

It is indeed the first technically multitasking-capable CPU in the series.

The lack of paging and 16-bit only segmentation quickly tarnished its usability for one though.

There were also some issues with privilege rings isolation and legacy compatibility issues that got fixed in 80386.

So yeah, while it is certainly technically capable, it's more of an early beta for the purpose.

ferdnyc

MooreBreaker said: I find all these types of "first to ****" claims arbitrary for the most part.

Well, I don't recall the article saying anything about #FRIST!!s, the quote was "the 386.... made contemporary multitasking possible". Which is pretty undeniable, broadly. 68000-based platforms of the era, like the Amiga, Atari ST, and Macintosh, didn't really have true preemptive multitasking with memory protection. (Macs wouldn't really have capable CPUs until their PowerPC machines, and even with those MacOS 6/7/8/9 was never really meant for multitasking.)

abufrejoval Intel really never innovated with the x86 series CPUs.

True innovation was typically where Intel failed, starting from the 80432, and continuing with their i960 and "Cray on a chip" i860 designs.

The 80286 IMHO was essentially a PDP-11 with the MMU included, a PDP-11/34 if you want, and the 80386 followed the VAX 780, perhaps one of the reasons why Dave Cutler felt so much at home with creating WNT (Windows NT) which was the VMS (V++M++S++) successor at Microsoft.

Yes, the 80286 had a true MMU able to handle segmentation faults just like the PDP-11 with an MMU and thus pre-emptive multi-tasking, while the 680x0 series required an external MMU until the 68030. Thus it was theoretically perfect to run Unix (and in practice I ran Microport's System V release 2 on it), but I'd say the reason for its downfall was missing backward compatibility with real-mode DOS. And while its microcode implemented task switches were incredibly slow (something that Linus Torvalds famously ignored), I'm not sure that was their downfall.

Instead I'd attribute it to the fact that the PDP to VAX parallel transition via the 80386 only took two years as a copycat, which was modelled on the VAX with paging/virtual memory support and included a virtual 8086 mode for backward compatibility (but excluded virtual 80386 until the SL variants for notebooks, which Mendel Rosenblum & friends exploited to create VMware!).

Unix was really born on segmented PDP-11 machines very much like the 80286. It was BSD which essentially propelled Unix into the virtual memory domain on 32-bit machines, perhaps the last real Unix redesign and much more like Multics, which the Unix inventors considered such a massive failure, they went to files instead of virtual memory ranges as their major abstraction.

Their attempt to hide the short-sightedness of that abstraction called Plan-9 never really left the labs, much to their everlasting chagrin.

Well, Dennis Ritchie is beyond pain now, but Ken Thompson still suffers...

Or perhaps Bill Jolitz (386BSD) alongside Fernando Corbató (of Multics fame) is eternally singing to Dennis about what he missed to do with his life-time's achievement on Earth; heavens just might be a bit of a nightmare for nerds, as Stanislaw Lem has so fabulously described.

P.S.

Jochen Liedtke, today perhaps a senior angel of L4 fame, expounded why the simple jump to task state segments, which motivated 16-year old Linus Torvalds to try writing a Unix functional equivalent with perhaps a single page of code, turned out to be a catastrophically slow microcode monster in actual use: Lynn and Bill Jolitz also showed so much more sense with many more instructions, but demonstrably much fewer CPU cycles on 386BSD, which led Linus to accept that he was perhaps the worst kernel developer living and thus paved the way for Linux supremacy by open sourcing to those in the know of OS design.

IMHO even with all his cursing, Linus truly excels with his social code, manifested in his decision making, not computer source code.

AMD64 cut much of the 80286 crap, starting with my most beloved AAA instruction of Cobol/BCD fame (hi Grace!), which always came first in x86 ISA books, long before the JMP to task state segments, which spawned Linux.

History: if you don't know, you're much more likely to repeat all its mistakes.

Tanakoi

abufrejoval said: Intel really never innovated with the x86 series CPUs.

Yes, that explains why only a tiny handful of these x86 series CPUs were ever produced before the architecture died out entirely -- and today, it's nearly impossible to find a machine even capable of running x86 code. And Intel's 100,000+ patents on x86 architecture are simply an urban myth.